If you mention testing to anyone with a basic understanding of software engineering, they're likely to think of unit testing. This was certainly true of a podcast I was listening to the other day, where the "introduction to testing" discussion boiled down to the presenters saying that they understand the value of unit tests and know they should write them, but they don't...

The real value of testing goes beyond writing unit tests.

Testing code increases (design) quality

When most people think about good software design, they think of design patterns, principles like SOLID, and code smells. Writing tests is a good way of exposing design violations, because the friction you hit while writing a test usually points at exactly the problems that good software design is meant to remove.

Most newly written code is written to satisfy a single use case or scenario. Writing tests for your new code gives you a second scenario in which the code is used, which helps you think more broadly about how the code under test will be used and exposes any friction points or design concerns.

Example: Inject Dependencies / Single Responsibility

Let's say you're testing a new class, and you find that testing it also forces you to test another class. This could indicate that the other class is a dependency that should be injected. You could also argue that the new class violates the Single Responsibility Principle.

```csharp
using System.Net.Mail;

public class NotificationService
{
    public void NewCustomer(Customer customer)
    {
        var msg = $"You have a new customer called: {customer.Name}";

        // The SMTP client is created inline, so every test of this
        // method also ends up exercising SmtpClient.
        var smtp = new SmtpClient("smtp.example.com", 25);

        var mailMessage = new MailMessage();
        mailMessage.From = new MailAddress("noreply@example.com"); // placeholder sender
        mailMessage.To.Add("bob@example.com");
        mailMessage.Subject = "New customer";
        mailMessage.Body = msg;

        smtp.Send(mailMessage);
    }
}
```

In testing this NotificationService class you're also forced to test SmtpClient, which increases the complexity of the NotificationService tests. If we want to be more "Single Responsibility", the email sending functionality should be separated out into its own class (say, EmailSender). If we then inject it into NotificationService, we have the choice when writing tests to replace EmailSender with a mock. In short, mocks ensure that you're not always having to test other objects: the real object is replaced with a fake that is told to behave in a certain way for the purpose of the test.

Here is an alternate implementation that is more respectful of the Single Responsibility Principle:

```csharp
public class EmailSender
{
    // Virtual so that a test double (a mock or a hand-written subclass)
    // can override it.
    public virtual void Send(string to, string message)
    {
        // TODO: SmtpClient logic for composing the message and sending it
    }
}

public class NotificationService
{
    private readonly EmailSender _emailSender;

    // For testing, we can just inject a mock EmailSender
    public NotificationService(EmailSender emailSender)
    {
        _emailSender = emailSender;
    }

    public void NewCustomer(Customer customer)
    {
        var msg = $"You have a new customer called: {customer.Name}";

        // For testing, we can just check that EmailSender is
        // called with the right arguments. Testing that the
        // email sends is the responsibility of the tests
        // for EmailSender
        _emailSender.Send("bob@example.com", msg);
    }
}
```

There is more to testing than just unit tests

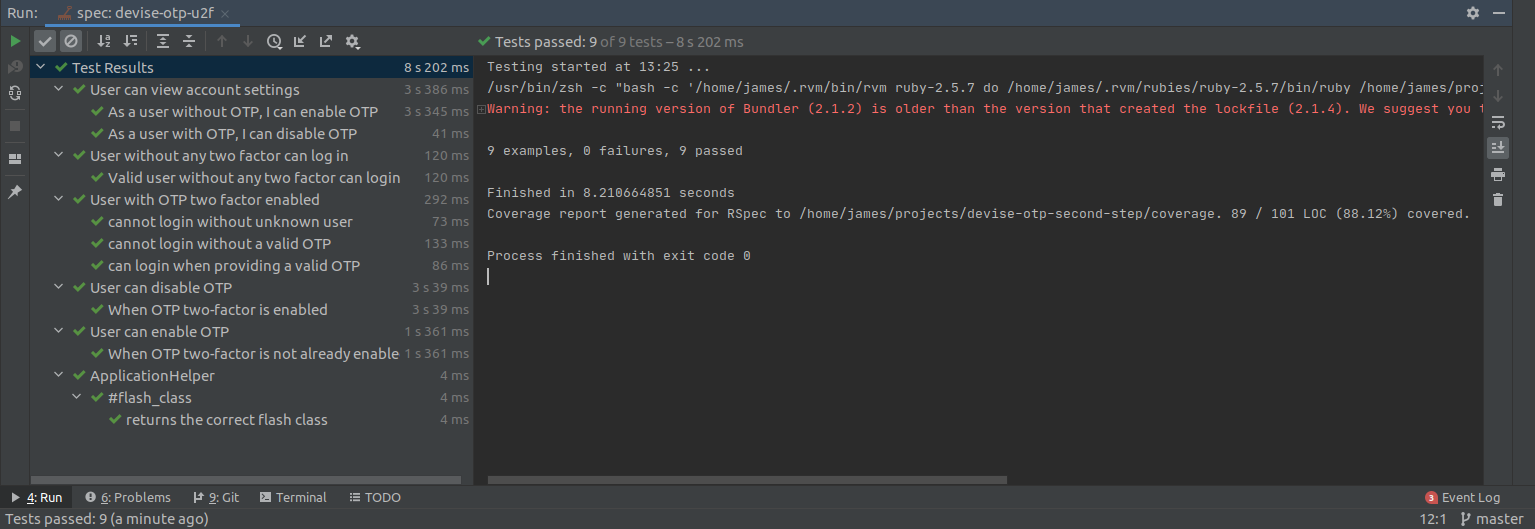

Tests fall into a number of different categories. Some of the most common are:

- Unit Tests

  Testing individual units/components of code.

- Integration Tests

  Testing that different components/modules of a system work correctly when combined. Integration tests typically work at a "black box" level: they are less concerned with the "inner workings" than unit tests are (given a specific input, a specific output is expected).

- Feature/Functional Tests

  Testing an entire slice of functionality of the system against the functional requirements. As with Integration tests, these are also "black box" in nature.

These different types of tests all exist on a spectrum of trade-offs between ease of writing, time to run and functional confidence.
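To make the difference between the first two categories a little more concrete, here is a minimal, framework-free sketch. The `TaxCalculator` and `Checkout` classes and the `Check` helper are hypothetical names invented purely for illustration; in a real project the checks would normally be written with your testing framework's own assertions.

```csharp
using System;

// Hypothetical domain classes, used only for illustration.
public class TaxCalculator
{
    public decimal AddTax(decimal net, decimal rate) => net + (net * rate);
}

public class Checkout
{
    private readonly TaxCalculator _taxCalculator;

    public Checkout(TaxCalculator taxCalculator)
    {
        _taxCalculator = taxCalculator;
    }

    public decimal Total(decimal[] netPrices, decimal taxRate)
    {
        decimal net = 0m;
        foreach (var price in netPrices)
        {
            net += price;
        }
        return _taxCalculator.AddTax(net, taxRate);
    }
}

public static class ExampleTests
{
    // Unit test: a single component, checked in isolation.
    public static void TaxCalculator_AddsTaxToNetAmount()
    {
        var result = new TaxCalculator().AddTax(100m, 0.2m);
        Check(result == 120m, "expected a gross amount of 120");
    }

    // Integration test: two real components combined, judged only
    // by the output produced for a given input.
    public static void Checkout_TotalsBasketIncludingTax()
    {
        var checkout = new Checkout(new TaxCalculator());
        var total = checkout.Total(new[] { 10m, 15m }, 0.2m);
        Check(total == 30m, "expected a basket total of 30");
    }

    // Stand-in for a testing framework's assertion.
    private static void Check(bool condition, string failureMessage)
    {
        if (!condition) throw new Exception(failureMessage);
    }
}
```

The unit test is quicker to write and pinpoints a failure precisely, whereas the integration test says more about whether the pieces actually work together.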

Visualising types of tests in this testing pyramid goes a fair way to explaining why most people think so strongly of unit tests when the subject of testing comes up. A project will typically have more unit tests than any other type of test, as they're the easiest type of tests to write. However, unit tests don't necessarily score very highly when it comes to assessing the functional confidence in the system, as they only ever test components in isolation and never the interaction between different parts – hence the value in Integration and Feature tests.

Whilst Integration and Feature tests give much higher confidence in the system's fitness for purpose, they take longer to write, as the scope of what they test is much broader.

A note on Manual Testing

Testing isn't just about writing automated tests, either. Manual testing by a Test/QA team can also play a vital role.

With manual testing, a QA team runs through a series of tests (scenarios) where, given specific actions, they expect certain results. These scenarios are often formalised in a test plan. Additionally, a QA team may be responsible for exploratory testing, where they have the freedom to explore the system in an attempt to highlight bugs or poor usability.

Tests Serve as Documentation

It's great working on a well-documented project, but good documentation is hard to write and even more difficult to maintain as a project moves forwards.

Feature tests can be a good way of documenting the behaviour of a system. With documentation, you have to make a very conscious effort to keep it up to date as changes are made to the system. Feature tests don't carry the same burden: if the behaviour of the system changes, the tests break, forcing the developer to update them.

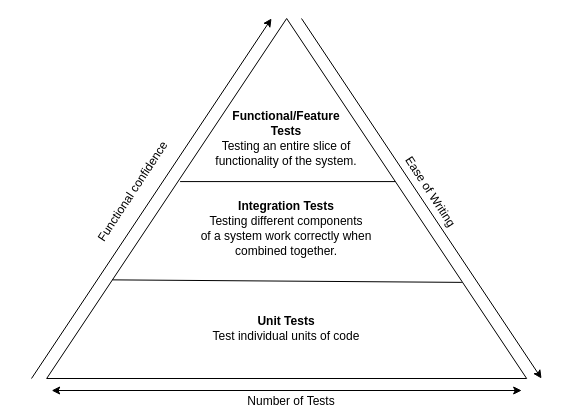

Code Coverage - Use it as a guide, not a vanity metric

Code coverage can be a very useful way of checking how much of your code is tested, and spotting when features are being added without any new tests being written.

If code coverage is used as a vanity metric (e.g. "we must write tests for everything and achieve 95%+ code coverage"), this can emphasise writing tests for the sake of writing tests, rather than writing tests to deliver value.

Delivering value with automated testing is important because automated tests are code, and all code has to be maintained at some cost.

A test can add value in a number of ways. A few simple examples:

- Testing that the feature delivers against the success criteria

- Testing the logic behind a calculation

Tests that arguably add limited value include tests that check that:

- A button is a particular colour

- A method always returns the same constant value when the method itself contains no logic.
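As a rough sketch of that contrast, the hypothetical `ShippingPolicy` class below has one method with real logic and one that just returns a constant. The class, the 50 threshold, the 4.99 charge and the `Check` helper are all invented for illustration only. Both tests raise the coverage figure, but only the first is likely to catch a genuine mistake.

```csharp
using System;

// Hypothetical class, used only for illustration.
public class ShippingPolicy
{
    // No logic: a test for this would only restate the constant.
    public string CurrencyCode() => "GBP";

    // Real logic with a boundary, which is where a test earns its keep.
    public decimal ShippingCost(decimal orderTotal)
    {
        return orderTotal >= 50m ? 0m : 4.99m;
    }
}

public static class ShippingPolicyTests
{
    // Higher value: pins down the behaviour on both sides of the threshold.
    public static void ShippingCost_IsFreeFromFiftyUpwards()
    {
        var policy = new ShippingPolicy();
        Check(policy.ShippingCost(49.99m) == 4.99m, "orders under 50 should pay for shipping");
        Check(policy.ShippingCost(50m) == 0m, "orders of exactly 50 should ship for free");
    }

    // Limited value: it can only fail if someone edits the constant.
    public static void CurrencyCode_IsGbp()
    {
        Check(new ShippingPolicy().CurrencyCode() == "GBP", "unexpected currency code");
    }

    // Stand-in for a testing framework's assertion.
    private static void Check(bool condition, string failureMessage)
    {
        if (!condition) throw new Exception(failureMessage);
    }
}
```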

I have often found that code coverage in the region of 80-90% can strike a sensible balance: high enough to catch people who add features without any tests, but low enough that you're not having to test every line of code just to satisfy a metric.

We Don't Have Time To Write Tests

We've all probably heard this at some point, and if you haven't, it's probably only a matter of time before you do.

Meeting deadlines is a natural part of software engineering. At some point the project has to be released into the wild. In every scenario where I've heard that there isn't time for testing, it has always been when the deadline is tight and someone believes that time can be saved by not writing tests.

Blindly agreeing to work in an environment where testing should be sacrificed has a few drawbacks:

- Those making the argument of "We Don't Have Time" probably don't understand the value of testing. Justifying time for testing is likely to always be a struggle.

- Avoiding writing tests means that your end users become the testers. You will only find defects once your code has shipped to production, by which point who knows how many other features have been built on yet-to-be-discovered defects.

- It is broadly accepted that fixing issues closer to the point in time where they were introduced is cheaper than fixing them later. This is for a number of reasons. The developers won't have forgotten the context of the newly written code and won't have to re-familiarise themselves with the problem space. And fixing issues is much easier when new features haven't been written on top of buggy code, as other defects and side effects may emerge.

- Any regression testing will have to be manual, which can limit your ability to ship code quickly, as each regression run needs people to carry it out. (I'll assume you have a QA team and that it is at least someone's job to write a manual regression test plan.)

When you try to fight the standpoint of not having time to write tests, a common counter-argument is "we can write the tests later", which often rests on a poor assumption.

As I pointed out earlier in this post, writing tests can help identify quality issues in software design. You can only easily retrofit tests if you've been writing good quality, testable code all along; otherwise you're building on bad foundations and a lot of refactoring will ensue. I'm yet to work on a project where retrofitting tests was as easy as writing them alongside feature development from Day 1.

Anecdotally, project managers and other stakeholders involved in running a project are proficient at assessing and estimating the time that goes into different tasks in the delivery phase of the project. Once a project has shipped, however, stakeholders often neglect to monitor the time spent fixing bugs and defects.

Whilst writing no tests might make your delivery timeline fit your deadline, this likely comes at the expense of end users frustrated by buggy software, and of much more time spent fixing defects after delivery. Ironically, that could take much longer than if testing had been adopted in the first place.

On the flip side, the real world does introduce constraints, and sometimes you have to flex or break your processes to fit reality. If you do find yourself in this situation, take some time to think about how the risks of not writing tests as you go could be mitigated. For example:

- Assuming there is no QA team, someone writes a manual test plan for regressions and pre-release testing.

- If there is a QA team, developers and QA should meet more frequently to review the test plan to ensure it captures areas that would usually be covered by tests written by the developers.

- How frequently can you manually regression test your project within the time constraints? The more frequent the better, to prevent building on top of buggy code.

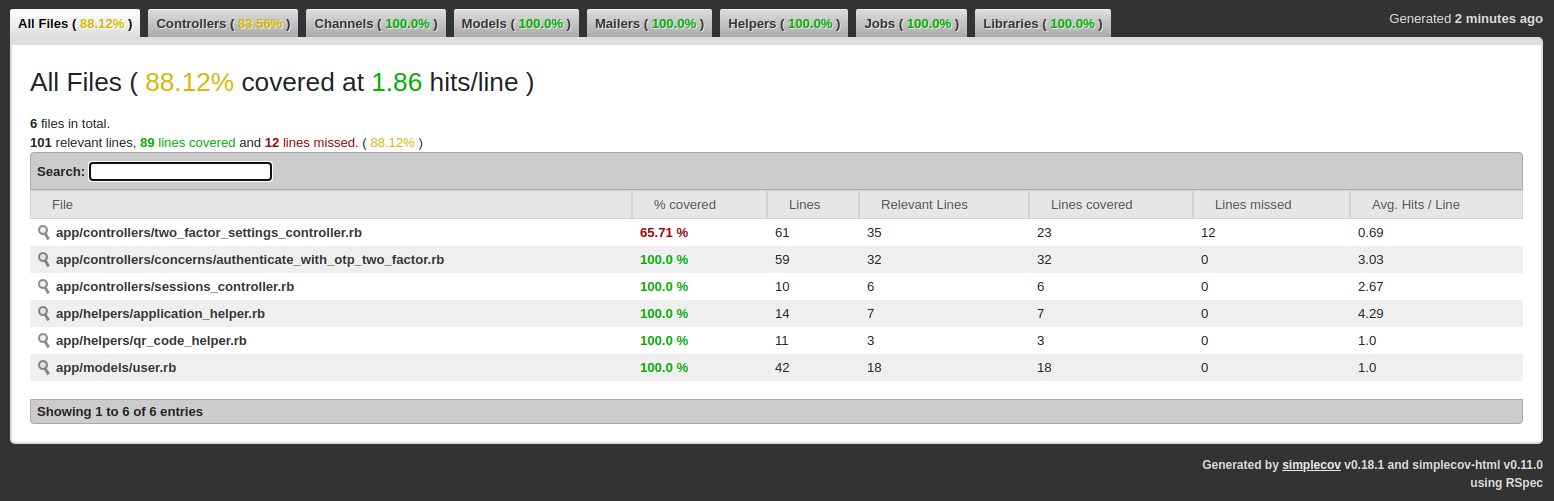

Testing Your Code

Automated testing for most projects revolves around a few basic building blocks:

- Testing Framework

  A testing framework is responsible for running the tests and aggregating the results. It also defines how you write tests. Most languages have a handful of popular and well-maintained testing frameworks, for example JUnit for Java, RSpec for Ruby and pytest for Python.

- Assertion Libraries

  All testing frameworks include a way of writing assertions (what value you expect certain variables/outputs to have). However, you may find that a third-party library provides more powerful or intuitive assertions. For example, JUnit is a testing framework for Java, and AssertJ is an assertion library that provides many more assertions out of the box than JUnit does.

- Mocking, Stubbing and Spying

  The difference between mocking, stubbing and spying is a topic in and of itself. In short, they can be very useful for building fake objects, returning a fake response from a method, or inspecting how a method or object has been interacted with. If you plan on taking testing seriously, pick a mocking/stubbing/spying library for your programming language/testing framework and learn it well; it will pay dividends in no time.

- Test Fixtures

  A "Test Fixture" is a piece of code that is responsible for ensuring that a test environment is set up correctly. For example, you may have a test fixture that is responsible for populating a test database with the data your tests need. Sometimes test fixtures need to run before every test, and sometimes once before the entire test suite (this varies based on your needs).

  Understanding how to write test fixtures using your testing framework will cut down on the effort involved in writing each individual test, as well as reducing setup boilerplate.
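As a rough illustration of the last two building blocks, here is a minimal, framework-free sketch of a hand-rolled spy and a tiny fixture for the NotificationService example from earlier in this post. It assumes an `IEmailSender` interface in place of the concrete EmailSender class, which the earlier example does not define, and the names `RecordingEmailSender`, `NotificationServiceFixture` and `Check` are all hypothetical. In a real project a mocking library and your testing framework's own assertions and setup hooks would normally replace the hand-written parts.

```csharp
using System;
using System.Collections.Generic;

public class Customer
{
    public string Name { get; set; } = "";
}

// Assumed abstraction over the email-sending code (not part of the earlier example).
public interface IEmailSender
{
    void Send(string to, string message);
}

public class NotificationService
{
    private readonly IEmailSender _emailSender;

    public NotificationService(IEmailSender emailSender)
    {
        _emailSender = emailSender;
    }

    public void NewCustomer(Customer customer)
    {
        var msg = $"You have a new customer called: {customer.Name}";
        _emailSender.Send("bob@example.com", msg);
    }
}

// A hand-rolled spy: sends nothing, but records every call for later inspection.
public class RecordingEmailSender : IEmailSender
{
    public List<(string To, string Message)> Sent { get; } =
        new List<(string To, string Message)>();

    public void Send(string to, string message)
    {
        Sent.Add((to, message));
    }
}

// A tiny fixture: builds the object under test together with its fake
// dependency, so each test starts from the same known state.
public class NotificationServiceFixture
{
    public RecordingEmailSender EmailSender { get; } = new RecordingEmailSender();
    public NotificationService Service { get; }

    public NotificationServiceFixture()
    {
        Service = new NotificationService(EmailSender);
    }
}

public static class NotificationServiceTests
{
    public static void NewCustomer_SendsOneEmailToBob()
    {
        var fixture = new NotificationServiceFixture();

        fixture.Service.NewCustomer(new Customer { Name = "Alice" });

        Check(fixture.EmailSender.Sent.Count == 1, "expected exactly one email");
        Check(fixture.EmailSender.Sent[0].To == "bob@example.com", "unexpected recipient");
        Check(fixture.EmailSender.Sent[0].Message == "You have a new customer called: Alice",
              "unexpected message body");
    }

    // Stand-in for a testing framework's assertion.
    private static void Check(bool condition, string failureMessage)
    {
        if (!condition) throw new Exception(failureMessage);
    }
}
```

Because the spy only records calls, the test never touches a real SMTP server, and the fixture keeps the setup in one place as more tests are added.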

The specifics of writing automated tests go well beyond the scope of this blog post. If you're new to testing, these are areas that you'll likely want to read up on in more detail. Most testing frameworks and libraries worth learning have extensive documentation and examples.

Conclusion

Testing is a huge area of software engineering. It is more than just writing unit tests, and it delivers value beyond having a number of tests to show for it.

Testing is ultimately a driver for productivity and quality. Quality increases both from a functional, fit-for-purpose perspective, where users are exposed to fewer and fewer bugs, and from a software quality perspective, where the underlying codebase is typically better designed than if it had been written without tests.

It's also easier to be productive on a project with tests, as the tests document the current behaviour of the system and allow you to change it with confidence that the test suite will catch any regressions in functionality. And the more automated the testing process, the lighter the burden on manual testing and the less friction there is in getting changes out to production quickly.